Lec 1: Introduction

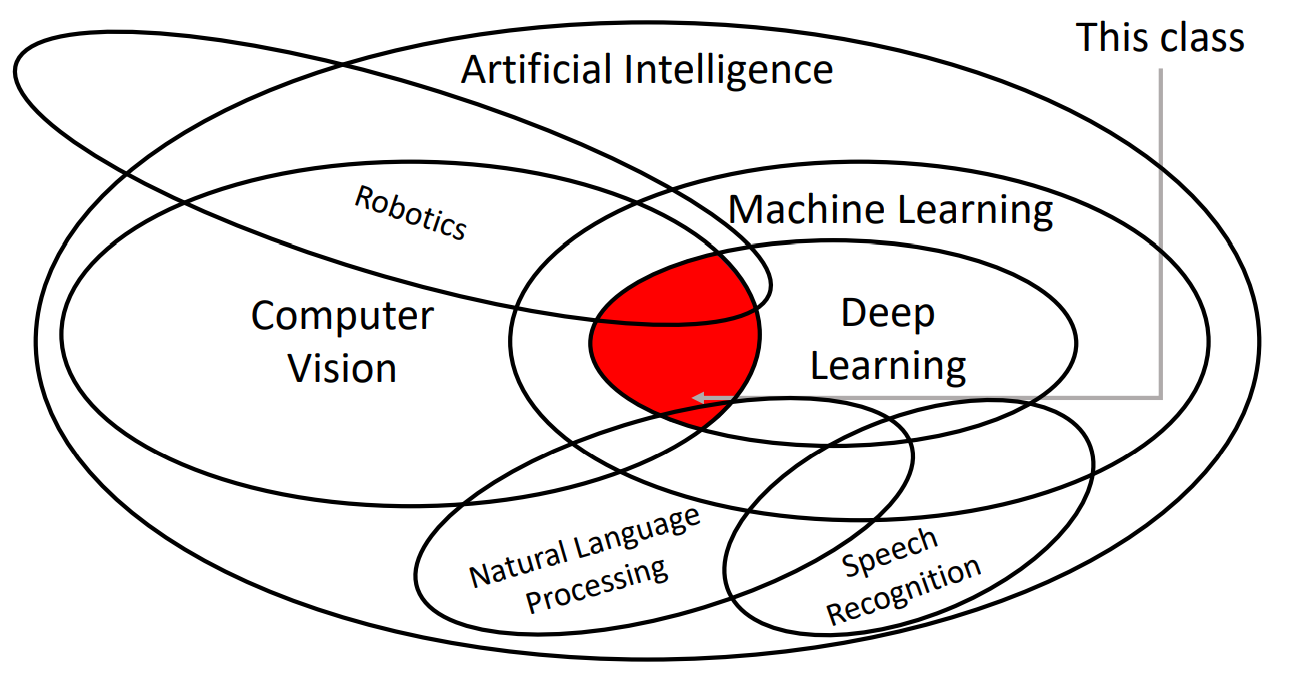

What is "deep learning for computer vision"?

History of Computer Vision

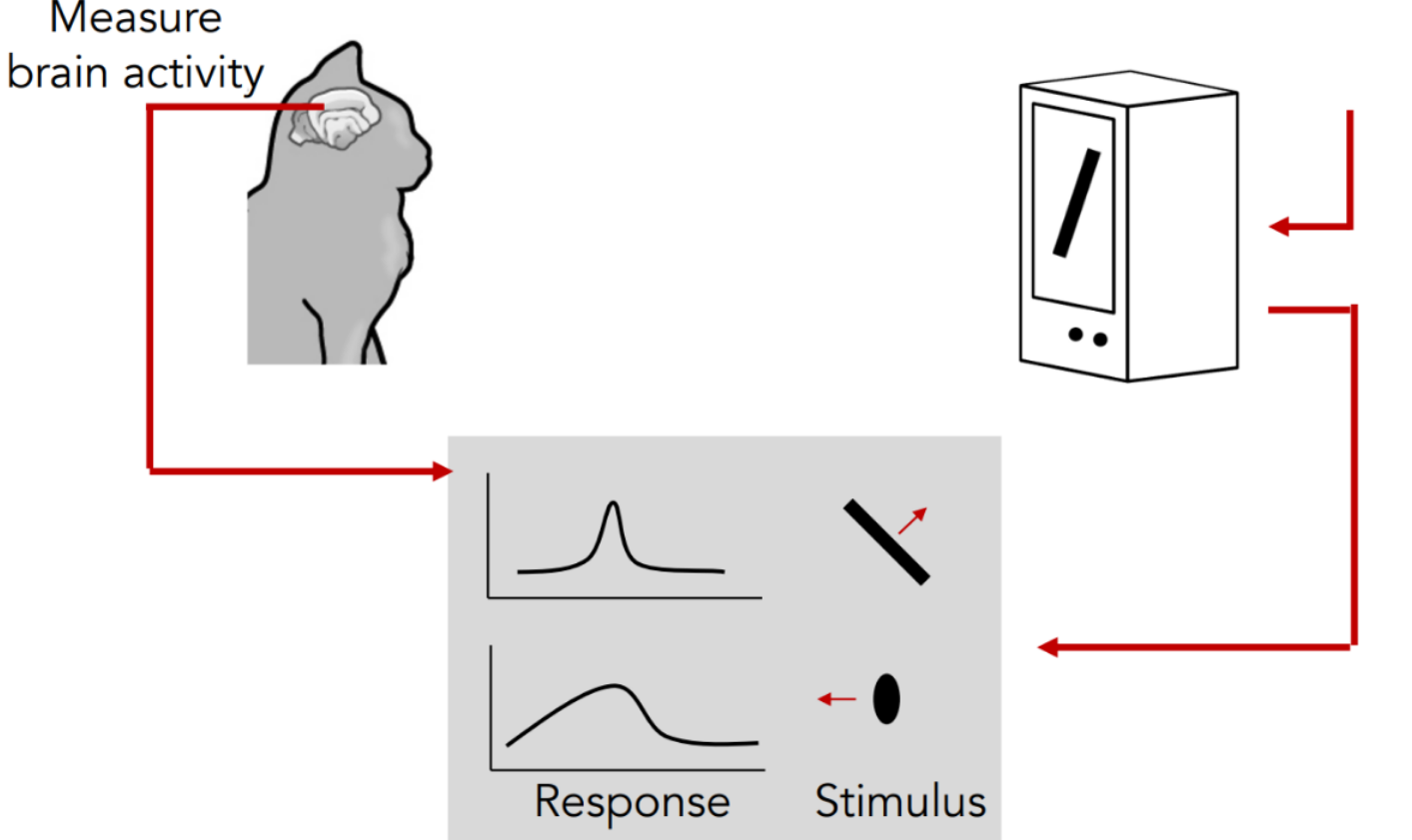

Hubel & Wiesel, 1959

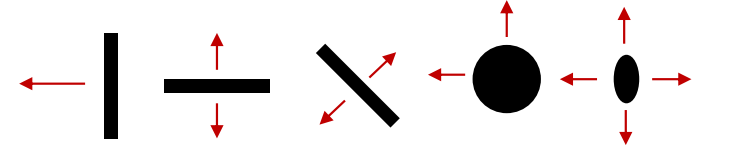

- Simple cells: respond to light orientation

- Complex cells: respond to light orientation and movement

- Hypercomplex cells: respond to movement with an end point

This research suggests that orientation matters and that edges are fundamental to visual processing.
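To make the idea of orientation-selective responses concrete, here is a minimal sketch in pure Python. It is only an analogy, not a model of the neurons Hubel and Wiesel studied: the Sobel-style kernels, the toy image, and the helper name `correlate2d` are all assumptions chosen for illustration.

```python
def correlate2d(image, kernel):
    """Valid-mode 2D cross-correlation on nested lists (no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Sobel-style kernels (an illustrative choice, not from the lecture).
VERTICAL_EDGE = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]    # fires on left-to-right intensity change
HORIZONTAL_EDGE = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]  # fires on top-to-bottom intensity change

# Toy 5x6 image: dark left half, bright right half, i.e. one vertical edge.
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]

vertical_response = correlate2d(image, VERTICAL_EDGE)
horizontal_response = correlate2d(image, HORIZONTAL_EDGE)

for row in vertical_response:
    print(row)  # non-zero only around the vertical edge
```

The filter tuned to vertical edges responds only near the boundary between the dark and bright halves, while the filter tuned to horizontal edges stays at zero everywhere on this image.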

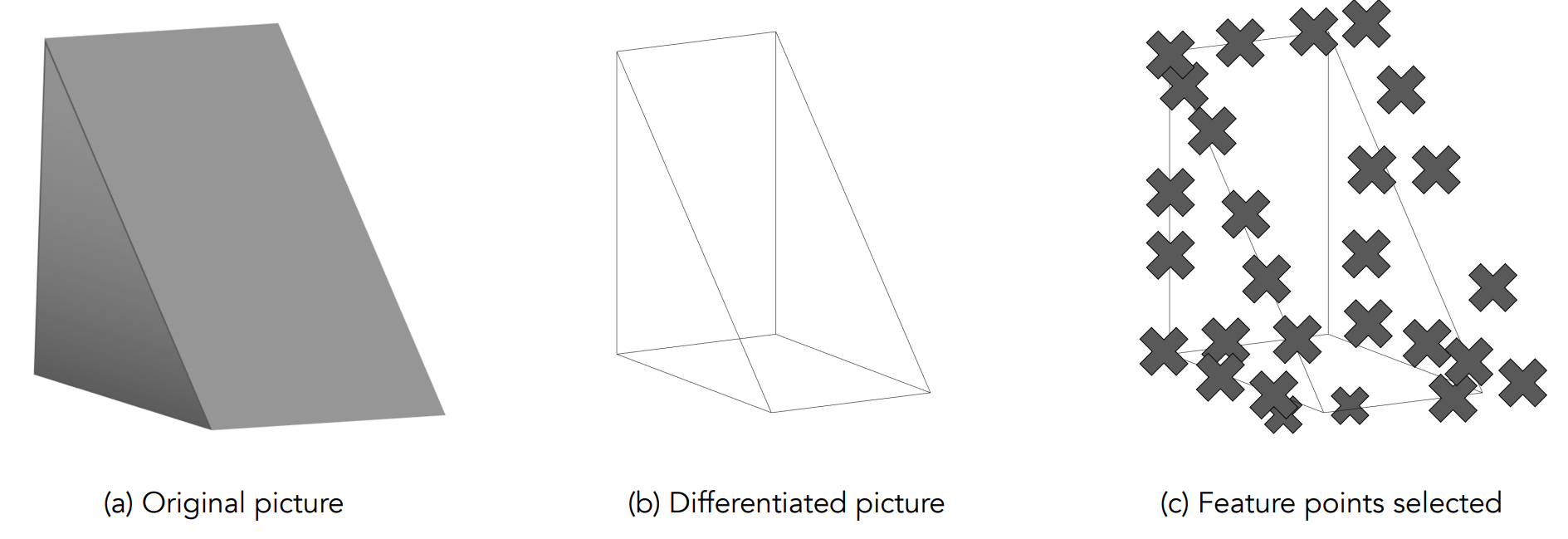

Larry Roberts, 1963

Larry Roberts is commonly regarded as the father of computer vision. In his Ph.D. thesis at MIT (1963), he discussed the possibility of extracting 3D geometric information from 2D perspective views of blocks (polyhedra).

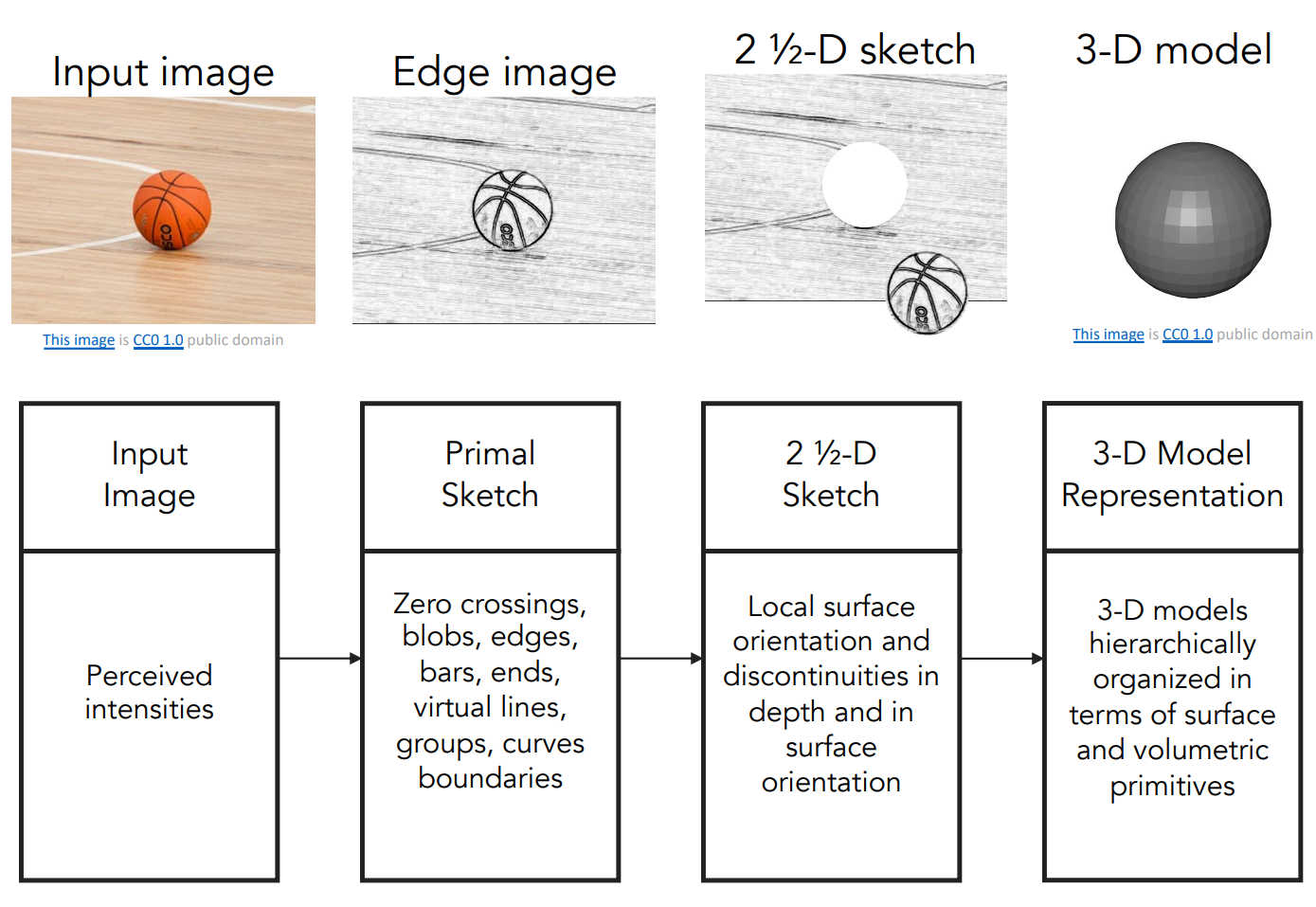

David Marr, 1970

Marr proposed that visual processing proceeds in stages, starting from the raw input image and building three successive representations:

- Primal sketch: find edges and similar low-level features in the raw image

- 2½-D sketch: estimate depth from the raw image and identify the object in the edge image

- 3-D model: convert the 2½-D object into a 3-D object

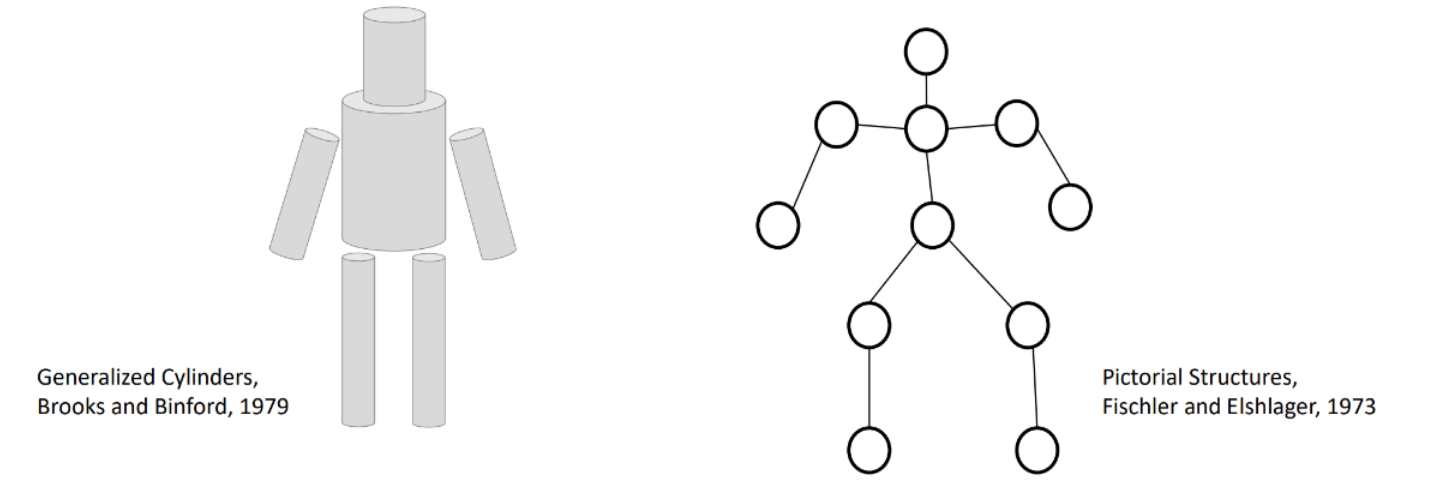

1970s and onwards

In the 1970s, many algorithms were proposed that recognize an object as a collection of "parts", such as the robot shown below. Each part is recognized by its edges, and the parts are combined topologically to form the object.

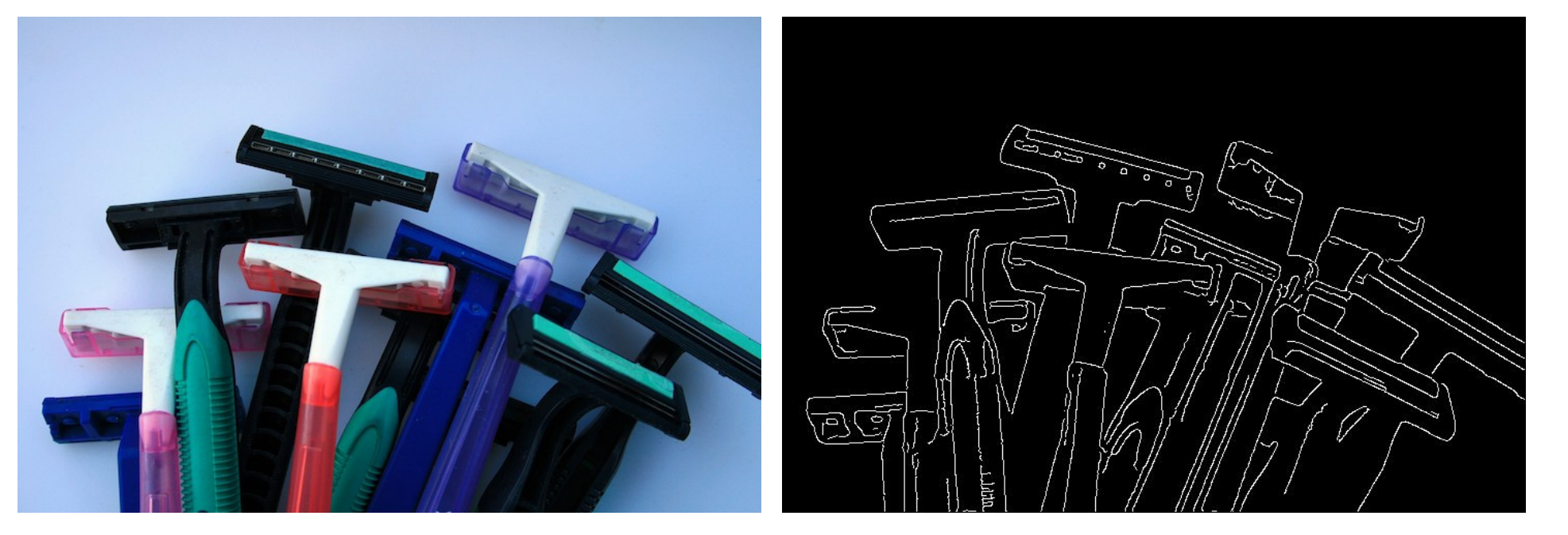

In the 1980s, cameras became much better and edge detection became feasible.

As in the image below, given a template of a razor, you can find all razors by matching edges.

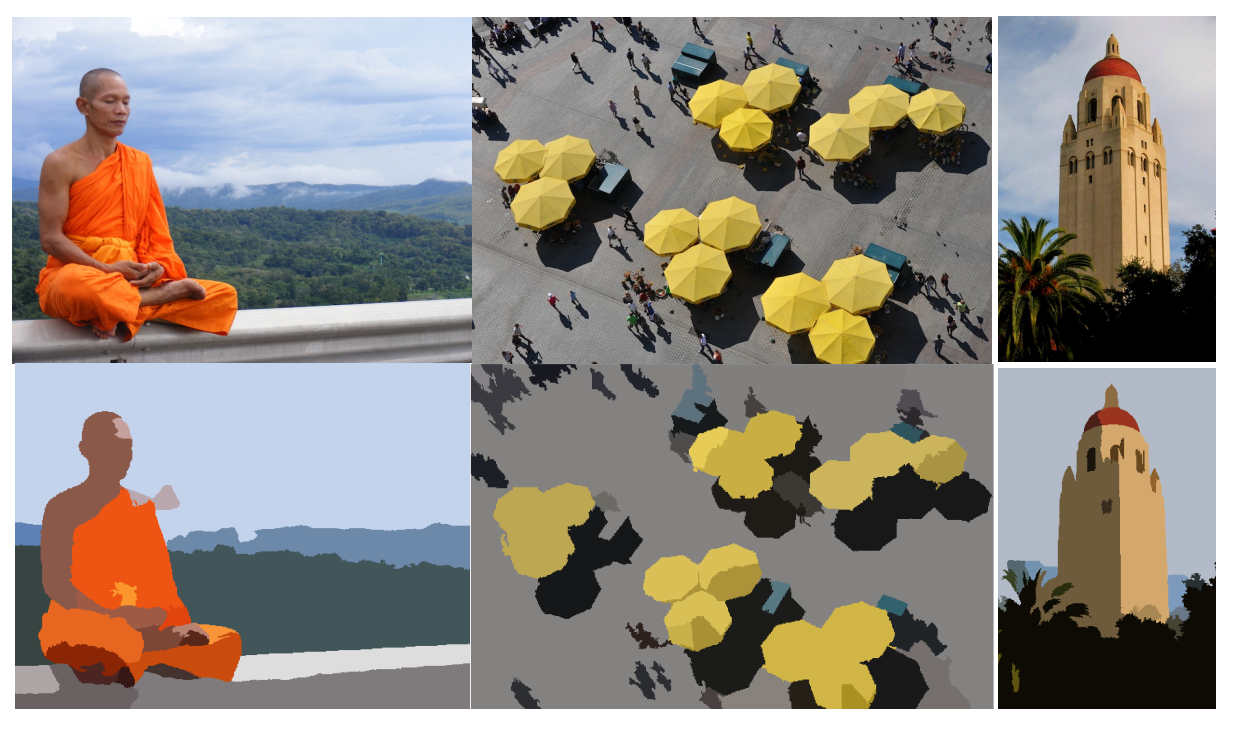

In the 1990s, computer scientists tried to break an image into several semantically meaningful segments instead of only matching edges.

In the 2000s, recognition via matching became increasingly popular.

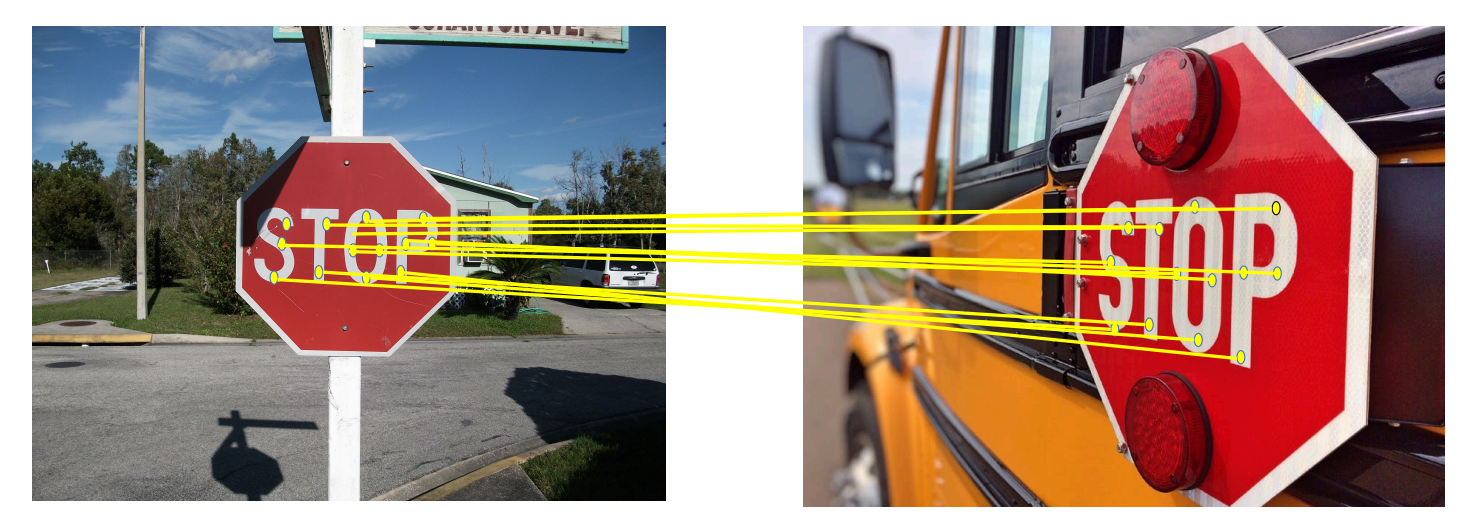

The SIFT (Scale-Invariant Feature Transform) algorithm first detects key points in an image.

- Key points are typically distinctive local features, such as corners or textured patches, that can be detected repeatably across different views of the same scene.

Then, feature descriptors are extracted within the local regions surrounding each key point.

- These descriptors are vectors that encode information about the image around each key point and are invariant to changes in scale, rotation, and illumination.

- The descriptors are then used in subsequent tasks such as image matching and object recognition.
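The matching step can be sketched as a nearest-neighbor search over descriptor vectors. This is a minimal illustration under stated assumptions, not the SIFT pipeline itself: the descriptors below are made-up 4-D vectors (standard SIFT descriptors are 128-D), and `match_descriptors` is a hypothetical helper name.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def match_descriptors(desc_a, desc_b):
    """For each descriptor in desc_a, find the index of its nearest neighbor in desc_b."""
    matches = []
    for i, d in enumerate(desc_a):
        j = min(range(len(desc_b)), key=lambda k: euclidean(d, desc_b[k]))
        matches.append((i, j))
    return matches

# Hypothetical descriptors for key points in two images (toy values).
image_a = [[1.0, 0.0, 0.0, 0.2], [0.0, 1.0, 0.1, 0.0]]
image_b = [[0.0, 0.9, 0.1, 0.0], [0.5, 0.5, 0.5, 0.5], [0.9, 0.1, 0.0, 0.2]]

matches = match_descriptors(image_a, image_b)
print(matches)  # pairs of (index in image_a, index in image_b)
```

Because the descriptors are designed to stay similar under scale, rotation, and illumination changes, key points that show the same physical structure in two images should end up close together in descriptor space, which is what makes this simple distance comparison useful.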

Facial Detection

Viola and Jones proposed a very practical face detection algorithm in 2001. It was one of the first successful applications of machine learning to vision:

- It uses boosted decision trees, trained with a slightly modified version of AdaBoost.
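To illustrate the boosting idea, here is a minimal sketch of plain AdaBoost with one-level decision trees (stumps) on toy 1-D data. It is not the Viola-Jones detector or its modified AdaBoost variant: the data, the function names, and the number of rounds are assumptions chosen for illustration.

```python
import math

def stump_predict(x, threshold, polarity):
    """One-level decision tree: predict `polarity` if x >= threshold, else the opposite."""
    return polarity if x >= threshold else -polarity

def train_stump(xs, ys, weights):
    """Pick the threshold and polarity with the lowest weighted error."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            error = sum(w for w, x, y in zip(weights, xs, ys)
                        if stump_predict(x, threshold, polarity) != y)
            if best is None or error < best[0]:
                best = (error, threshold, polarity)
    return best

def adaboost(xs, ys, rounds):
    """Train a weighted ensemble of stumps; labels must be +1 or -1."""
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(rounds):
        error, threshold, polarity = train_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)  # avoid division by zero
        alpha = 0.5 * math.log((1 - error) / error)
        ensemble.append((alpha, threshold, polarity))
        # Increase the weight of misclassified samples, decrease the rest.
        weights = [w * math.exp(-alpha * y * stump_predict(x, threshold, polarity))
                   for w, x, y in zip(weights, xs, ys)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def predict(ensemble, x):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(alpha * stump_predict(x, t, p) for alpha, t, p in ensemble)
    return 1 if score >= 0 else -1

# Toy data that no single stump can separate: + + - - + +
xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]

ensemble = adaboost(xs, ys, rounds=3)
predictions = [predict(ensemble, x) for x in xs]
print(predictions)
```

No single threshold classifies this data correctly, but the weighted vote of three stumps does. Each weak classifier is cheap to evaluate, which fits the practical character of the detector described above.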

History of Machine (Deep) Learning

The development of machine (deep) learning followed a timeline almost parallel to that of computer vision. Before 2012, many people had tried to apply ML techniques to CV, but most attempts had limited success.

Three factors contributed to the success of AlexNet in CV in 2012:

- algorithm: AlexNet

- data: Big Data Era

- computation: Moore's Law